Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

Abstract

Large language models can encode a wealth of semantic knowledge about the world. Such knowledge could in principle be extremely useful to robots aiming to act upon high-level, temporally extended instructions expressed in natural language. However, a significant weakness of language models is that they lack contextual grounding, which makes it difficult to leverage them for decision making within a given real-world context. For example, asking a language model to describe how to clean a spill might result in a reasonable narrative, but it may not be applicable to a particular agent, such as a robot, that needs to perform this task in a particular environment. We propose to provide this grounding by means of pretrained behaviors, which are used to condition the model to propose natural language actions that are both feasible and contextually appropriate. The robot can act as the language model’s “hands and eyes,” while the language model supplies high-level semantic knowledge about the task. We show how low-level tasks can be combined with large language models so that the language model provides high-level knowledge about the procedures for performing complex and temporally extended instructions, while value functions associated with these tasks provide the grounding necessary to connect this knowledge to a particular physical environment. We evaluate our method on a number of real-world robotic tasks, where we show that this approach is capable of completing long-horizon, abstract, natural language instructions on a mobile manipulator.

Approach

Imagine a robot operating in a kitchen that is capable of executing skills such as "pick up the coffee cup" or "go to the sink".

To get the robot to use these skills to perform a complex task (e.g. "I spilled my drink, can you help?"), the user could manually break it up into steps consisting of these atomic commands.

However, this would be exceedingly tedious. A language model can split the high-level instruction ("I spilled my drink, can you help?") into sub-tasks, but it cannot do so effectively unless it knows what the robot is capable of, given the robot's abilities and the current state of the robot and its environment.

When we query existing large language models with "I spilled my drink, can you help?", they may respond with "You could try using a vacuum cleaner" or "I'm sorry, I didn't mean to spill it".

The main principle we use to connect LLMs to physical tasks is the observation that, in addition to asking the LLM to simply interpret an instruction, we can use it to score the likelihood that an individual skill makes progress toward completing the high-level instruction. Furthermore, if each skill has an accompanying affordance function that quantifies how likely it is to succeed from the current state (such as a learned value function), its value can be used to weight the skill's likelihood.
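A minimal sketch of this scoring rule follows. It assumes that "weighting" means multiplying the language model's likelihood for each skill by that skill's affordance value, and then picking the skill with the highest product. The function names, skill names and numbers are hypothetical placeholders, not outputs of a real language model or value function.

```python
from typing import Dict


def combined_scores(llm_prob: Dict[str, float], affordance: Dict[str, float]) -> Dict[str, float]:
    """Weight each skill's LLM likelihood by its affordance (estimated success probability)."""
    # Both dicts are assumed to share the same skill keys.
    return {skill: llm_prob[skill] * affordance[skill] for skill in llm_prob}


def select_skill(llm_prob: Dict[str, float], affordance: Dict[str, float]) -> str:
    """Return the skill with the highest combined score."""
    scores = combined_scores(llm_prob, affordance)
    return max(scores, key=scores.get)


if __name__ == "__main__":
    # Hypothetical values: the LLM favors picking up the sponge,
    # but no sponge is in reach, so that skill's affordance is low.
    llm_prob = {"find a sponge": 0.30, "pick up the sponge": 0.60, "go to the sink": 0.10}
    affordance = {"find a sponge": 0.90, "pick up the sponge": 0.05, "go to the sink": 0.80}
    print(select_skill(llm_prob, affordance))  # find a sponge
```

With these numbers the combined scores are 0.27, 0.03 and 0.08, so the low affordance of "pick up the sponge" outweighs its high language-model likelihood.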

Once a skill is selected, we execute it on the robot, and the process continues by iteratively selecting the next skill and appending it to the instruction. In practice, we structure the planning as a dialog between a user and a robot, in which the user provides the high-level instruction, e.g. "How would you bring me a coke can?", and the language model responds with an explicit sequence, e.g. "I would: 1. Find a coke can, 2. Pick up the coke can, 3. Bring it to you, 4. Done".
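The iterative loop can be sketched as follows. This is a greedy, illustrative version under stated assumptions: the prompt format is modeled on the dialog above, the combined score is assumed to be a product, and `plan`, `toy_llm_score`, `SCRIPT` and `SKILLS` are hypothetical names. The toy scorer replaces a real language model and simply favors the next unfinished step of a fixed script, so that the loop can be run without any model.

```python
from typing import Callable, List

# Hypothetical scripted plan used only to drive the toy scorer below.
SCRIPT = ["find a coke can", "pick up the coke can", "bring it to you", "done"]
SKILLS = SCRIPT + ["go to the sink"]  # includes one distractor skill


def plan(
    instruction: str,
    skills: List[str],
    llm_score: Callable[[str, str], float],
    affordance: Callable[[str], float],
    execute: Callable[[str], None],
    max_steps: int = 20,
) -> List[str]:
    """Greedy loop: pick the best skill, run it, append it to the prompt, repeat."""
    # Dialog-style prompt; the exact wording is an assumption.
    prompt = f"Human: {instruction}\nRobot: I would: "
    steps: List[str] = []
    for i in range(1, max_steps + 1):
        # Combined score = LLM likelihood weighted by affordance (assumed product).
        best = max(skills, key=lambda s: llm_score(prompt, s) * affordance(s))
        steps.append(best)
        if best == "done":
            break
        execute(best)  # run the skill on the robot (stubbed here)
        prompt += f"{i}. {best}, "  # condition the next choice on this step
    return steps


def toy_llm_score(prompt: str, skill: str) -> float:
    """Stand-in for an LLM: favors the next step of SCRIPT not yet in the prompt."""
    n_done = sum(1 for s in SCRIPT if f". {s}," in prompt)
    return 0.9 if skill == SCRIPT[n_done] else 0.1


if __name__ == "__main__":
    executed: List[str] = []
    steps = plan("How would you bring me a coke can?", SKILLS, toy_llm_score,
                 lambda s: 1.0, executed.append)
    print(steps)
    # ['find a coke can', 'pick up the coke can', 'bring it to you', 'done']
```

Appending each chosen step to the prompt is what lets the next query take the partial plan into account. In a real system the affordance callable would also read the robot's current observation, which this sketch leaves out.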

Results

We benchmarked the proposed algorithm, SayCan, in two scenes, an office kitchen and a mock office kitchen, on 101 tasks specified by natural language instructions. Below we show some highlights of the results.

We visualize the decision-making process of SayCan. The blue bars indicate the (normalized) LLM probability of each skill, and the red bars indicate the (normalized) probability of successfully executing it. The green bars show the combined score, and the algorithm chooses the skill with the highest combined score. This visualization highlights the interpretability of SayCan.
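The three bars can be reproduced with a short sketch. It assumes that each bar is normalized to sum to one over the candidate skills and that the combined score is the product of the other two quantities. The paper's exact normalization may differ, and the function names, skill names and numbers are hypothetical.

```python
from typing import Dict, Tuple

Scores = Dict[str, float]


def normalize(scores: Scores) -> Scores:
    """Rescale non-negative scores so they sum to one."""
    total = sum(scores.values())
    return {skill: value / total for skill, value in scores.items()}


def decision_bars(llm_prob: Scores, affordance: Scores) -> Tuple[Scores, Scores, Scores]:
    """Return (blue, red, green) bar heights for one planning step."""
    blue = normalize(llm_prob)  # LLM probability per skill
    red = normalize(affordance)  # probability of successful execution
    green = normalize({s: llm_prob[s] * affordance[s] for s in llm_prob})  # combined score
    return blue, red, green


if __name__ == "__main__":
    llm_prob = {"find a coke can": 0.5, "pick up the coke can": 0.3, "go to the sink": 0.2}
    affordance = {"find a coke can": 0.2, "pick up the coke can": 0.9, "go to the sink": 0.6}
    blue, red, green = decision_bars(llm_prob, affordance)
    print(max(blue, key=blue.get))    # find a coke can
    print(max(green, key=green.get))  # pick up the coke can
```

Normalizing divides every combined score by the same constant. As a result, the tallest green bar always belongs to the skill with the largest unnormalized product, and normalization affects only how the bars are displayed.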

Given the task "I spilled my coke, can you bring me something to clean it up?", SayCan successfully planned and executed the following steps: 1. Find a sponge, 2. Pick up the sponge, 3. Bring it to you, 4. Done, as shown below.

However, if we slightly tweak the task to "I spilled my coke, can you bring me a replacement", SayCan instead plans the following steps: 1. Find a coke can, 2. Pick up the coke can, 3. Bring it to you, 4. Done. This highlights that SayCan can leverage the large capacity of LLMs, whose semantic knowledge about the world is useful both for interpreting instructions and for understanding how to execute them.

In the next example, SayCan leverages the ability of the affordances to "override" the language model. Although the language model believes that picking up the sponge is the right skill, the affordances indicate that this isn't possible, so "find a sponge" is chosen instead. This highlights the necessity of affordance grounding.

The proposed approach achieves an overall plan success rate of 70% and an execution success rate of 61% on the 101 tasks. For more details, please refer to our paper.

The proposed method can scale to long-horizon tasks involving multiple steps. For example, for the task "I spilled my coke on the table, how would you throw it away and bring me something to help clean", the robot successfully planned and executed 8 steps. The planning and execution process is shown in the video below.

For the task "I just worked out, can you bring me a drink and a snack to recover?", the planning and execution process is shown in the video below.

In the next example, we show that SayCan is able to plan and execute a very long-horizon task involving 16 steps.

Open Source

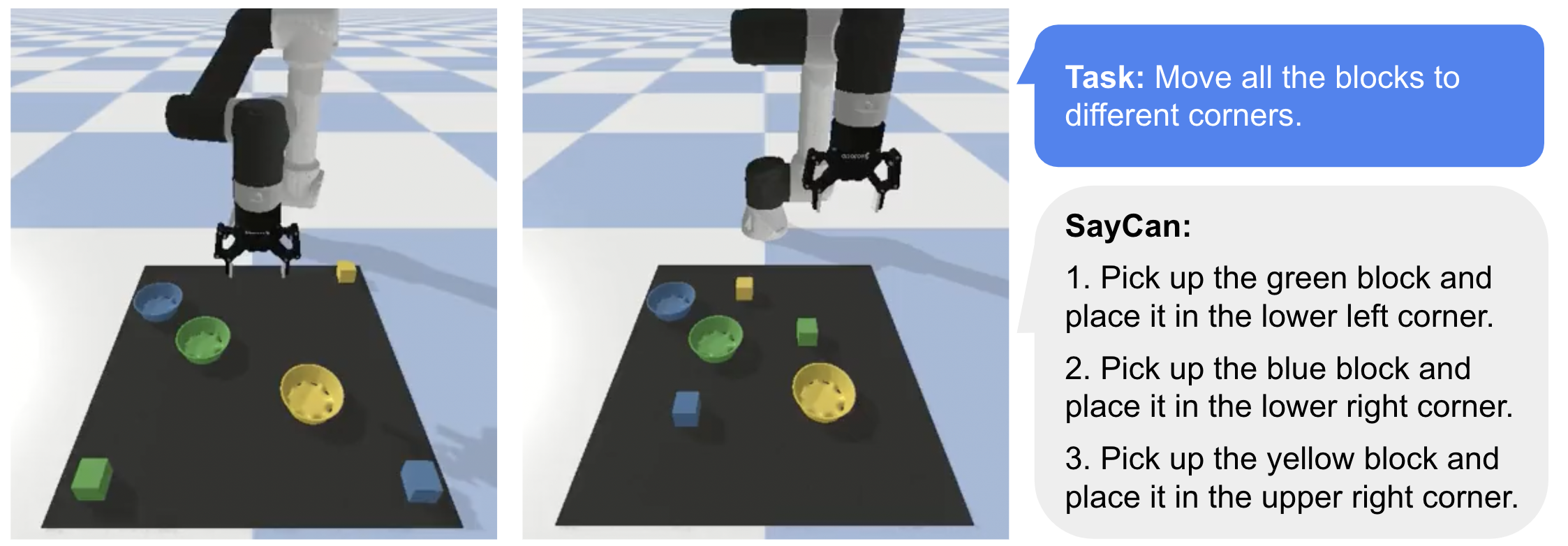

We open-source a version of SayCan that works with a simulated tabletop environment, available as a Colab notebook.